Selective photo permissions in iOS suck, actually

A couple of years ago, Apple introduced the idea of selective Photos access. The idea was that if you only let an app access specific photos, it couldn’t do something like pull all your photo metadata, including location, straight out of your camera roll. That’s great for apps you don’t necessarily trust not to abuse that access, given that there was no way for you to tell whether an app had collected the metadata from all your photos and uploaded it to a database somewhere.

Apple’s solution, introduced with iOS 14 in 2020, was to let you choose which photos an app can access. That sounds great in theory, but it has become a bit of a usability nightmare.

The problem is that if you only give an app selective access to your photos, you’re effectively choosing between two kinda sucky options.

If you allow selective access, then every time you want to share a new photo to the app, you have to take the photo (using whatever camera app you like, whether that’s the stock camera or something like Halide), jump into the app, hope it has a deep link to the Settings page where you can change which photos it can access (not all apps do, and the photo-selection flow can differ between apps), select the photos you just took, and then finally send them. This snap-select-send dance happens every single time you want to send a photo with an app that only has selective access to your photos, which adds a huge amount of friction to something that should take as few steps as possible.

But what’s the alternative?

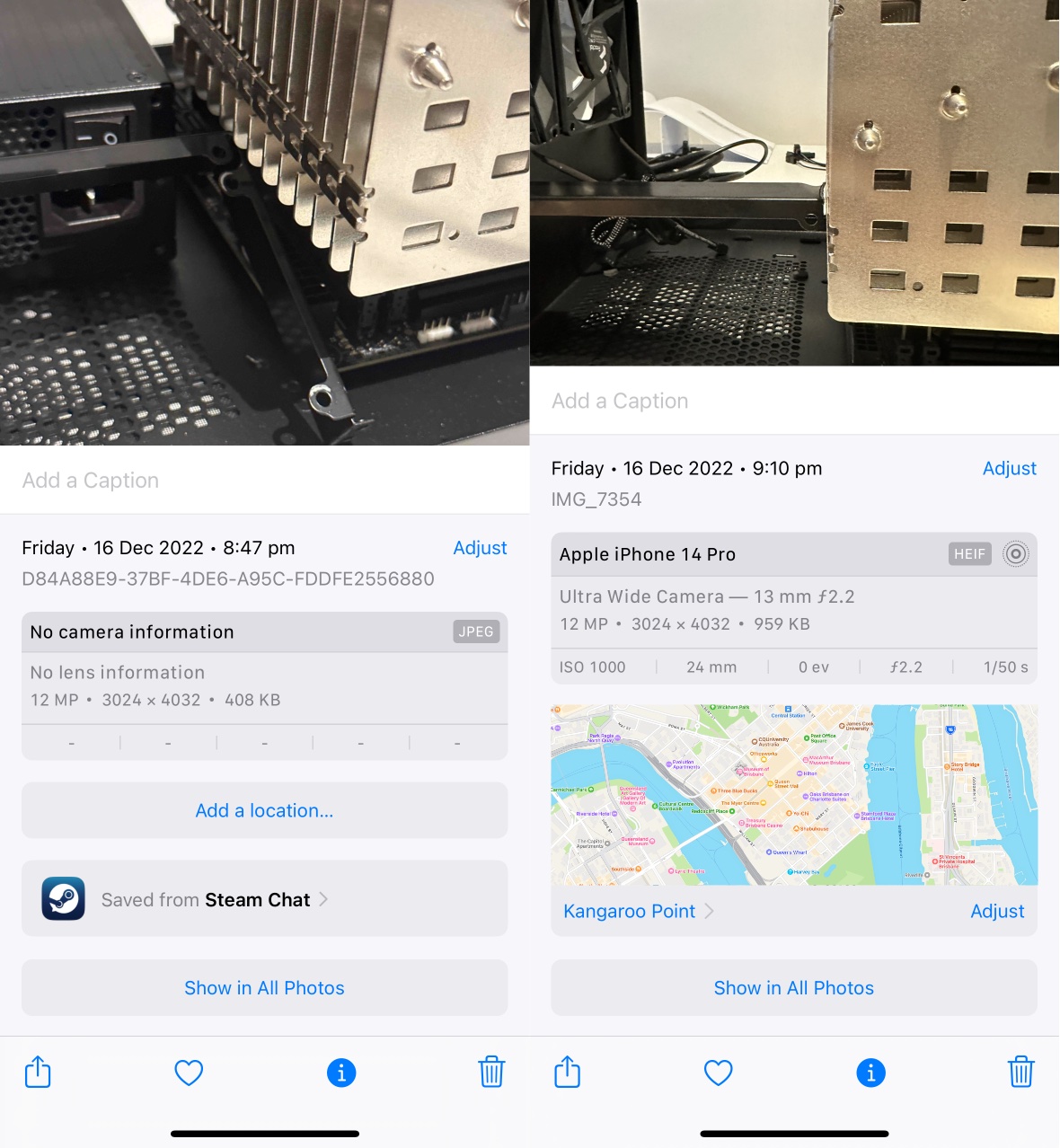

The other way around the snap-select-send dance is to take the photos with the app you’re sending them from in the first place. Most apps have an integrated camera option that lets you take a new photo right inside the app and send it directly. And while this is a much simpler, more streamlined process, it comes with its own caveats. For one, there’s no guarantee that photos taken within apps will be saved to your camera roll by default. Some apps save them automatically, while apps like Snapchat make you tap a button before the photo you’ve taken is saved. And even when you do save them, photos taken from within apps seem to be missing some of the usual metadata, like camera and lens info, exposure, focal length, and location. They also don’t seem to be able to be Live Photos or be captured in different image formats.

Note the differences in metadata between these two photos: the one on the left was taken using the Steam Chat app, and the one on the right using the stock camera.

Without looking too closely at the technical details, photos taken within apps might also miss out on some of the features afforded to the stock camera app, like automatically switching to the ultra wide lens for macro mode on recent Pro-model iPhones. Nor do you seem to get access to the stock camera app’s modes and effects. This means photos taken within apps aren’t necessarily going to match ones taken with the stock camera app. All of this is probably still fine if all you’re doing is taking photos to share with others, but you might miss that data months or years later. There do seem to be exceptions, since third-party camera apps like Halide can clearly capture in different formats, but I think Live Photos are exclusive to the stock camera app.

So when you want to share a photo to an app without giving it your entire location history from every photo in your camera roll, you can either:

– do a snap-select-send dance every time you want to send a new photo using that app, keeping your photo metadata intact and ensuring the best possible photos

– take and send the photo directly from within the app, which is a much simpler process but results in photos missing metadata and other features you might want later, like Live Photos and maybe even depth information.

And obviously, this only applies to photos. For screenshots, the situation is even worse: there’s no in-app equivalent of taking one, so you have to do the snap-select-send dance every time.

What’s the fix?

Unless I’m overlooking something here, isn’t it as simple as letting apps access only photos taken in the past one or two hours? If you’re sending a photo you just took, it seems unlikely you’d be doing it more than, say, two hours after taking it. A longer window would give more leeway, but I’d be concerned about an app having rolling 12- or 24-hour access to your photos, because then we’d end up with the same problem we had originally, when apps had access to all your photos. With a window of one or two hours, apps you haven’t used recently hopefully won’t be able to take more than a brief peek at your photos.
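As a rough sketch of how that rule could behave, here’s a rolling time-window filter in Swift. Nothing like this exists in iOS today: `PhotoRecord` and `visiblePhotos(_:now:window:)` are hypothetical names used only for illustration, and the two-hour default simply mirrors the window suggested above.

```swift
import Foundation

// Hypothetical stand-in for a photo as the OS might see it; not a real Photos framework type.
struct PhotoRecord {
    let identifier: String
    let creationDate: Date
}

// Hypothetical policy: an app only sees photos created within `window` seconds before `now`.
// The cutoff is recomputed from `now` on every call, so the window rolls forward over time.
func visiblePhotos(_ library: [PhotoRecord], now: Date, window: TimeInterval = 2 * 60 * 60) -> [PhotoRecord] {
    let cutoff = now.addingTimeInterval(-window)
    return library.filter { $0.creationDate >= cutoff && $0.creationDate <= now }
}

let now = Date()
let library = [
    PhotoRecord(identifier: "just-taken", creationDate: now.addingTimeInterval(-10 * 60)),
    PhotoRecord(identifier: "this-morning", creationDate: now.addingTimeInterval(-5 * 60 * 60)),
    PhotoRecord(identifier: "last-year", creationDate: now.addingTimeInterval(-365 * 24 * 60 * 60)),
]

let visible = visiblePhotos(library, now: now)
print(visible.map { $0.identifier })  // ["just-taken"]
```

Because the cutoff is measured from the moment the app asks, an app you open tomorrow would only see what you took in the two hours before opening it, not everything since you last used it.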

Maybe the better solution is to prevent apps from accessing photo metadata in the first place. Then none of this would be an issue, and we could go back to the time when apps had access to all photos, all the time. Selective access to photo metadata might not be technically possible, but that would be the ideal.